Position: Solve Layerwise Linear Models First to Understand Neural Dynamical Phenomena (Neural Collapse, Emergence, Lazy/Rich Regime, and Grokking)

This talk is a preview of an ICML 2025 position paper presentation.

Abstract

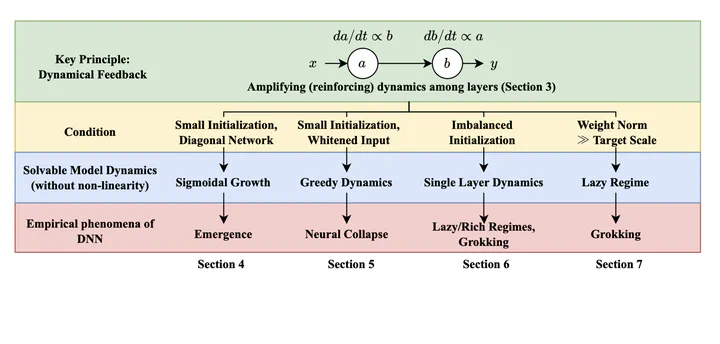

In physics, complex systems are often simplified into minimal, solvable models that retain only the core principles. In machine learning, layerwise linear models (e.g., linear neural networks) act as simplified representations of neural network dynamics. These models follow the dynamical feedback principle, which describes how layers mutually govern and amplify each other’s evolution. This principle extends beyond the simplified models, successfully explaining a wide range of dynamical phenomena in deep neural networks, including neural collapse, emergence, lazy and rich regimes, and grokking. In this position paper, we call for the use of layerwise linear models retaining the core principles of neural dynamical phenomena to accelerate the science of deep learning.
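To make the dynamical feedback principle concrete, here is a minimal sketch. It is not taken from the paper. It uses the smallest layerwise linear model, a two-layer scalar network f(x) = a·b·x, fitted to a target coefficient s by gradient descent on the squared loss 0.5·(a·b − s)². The names `train_two_layer`, `time_to_learn`, `s`, and `lr` are hypothetical. The gradient with respect to each layer is scaled by the other layer. From a small initialization, both layers therefore grow slowly at first, then amplify each other, which produces a plateau followed by an abrupt rise. A smaller initialization lengthens the plateau.

```python
from typing import List, Optional

# Hypothetical toy model: f(x) = a * b * x, loss L = 0.5 * (a*b - s)^2.
# This illustrates the dynamical feedback idea; it is not the paper's code.

def train_two_layer(a0: float, b0: float, s: float = 1.0,
                    lr: float = 0.01, steps: int = 3000) -> List[float]:
    """Run gradient descent and return the learned product a*b after each step."""
    a, b = a0, b0
    history = []
    for _ in range(steps):
        err = a * b - s            # residual of the end-to-end coefficient
        # Feedback: each layer's gradient is proportional to the OTHER layer,
        # so a small layer slows the other, and a growing layer speeds it up.
        grad_a = err * b
        grad_b = err * a
        a, b = a - lr * grad_a, b - lr * grad_b   # simultaneous update
        history.append(a * b)
    return history

def time_to_learn(history: List[float], s: float = 1.0,
                  frac: float = 0.5) -> Optional[int]:
    """First step at which the product reaches frac * s (None if never)."""
    for t, p in enumerate(history):
        if p >= frac * s:
            return t
    return None

if __name__ == "__main__":
    for init in (1e-3, 1e-2):
        h = train_two_layer(init, init)
        print(f"init={init:g}: reaches s/2 at step {time_to_learn(h)}, "
              f"final a*b={h[-1]:.6f}")
```

With these settings, both runs converge to a·b ≈ s. The run started at 1e-3 stays near zero for hundreds of steps before rising sharply, and it crosses s/2 later than the run started at 1e-2. This is the plateau-then-jump shape that layerwise linear models are used to analyze.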

We are excited to see the talk one week before its ICML presentation! For more details, please refer to the arXiv link.

Also, note that

- the speaker also authored a NeurIPS 2024 paper, which was the topic of a recent talk.

- speakers from two recent talks (one, two) are co-authors of this ICML 2025 position paper.