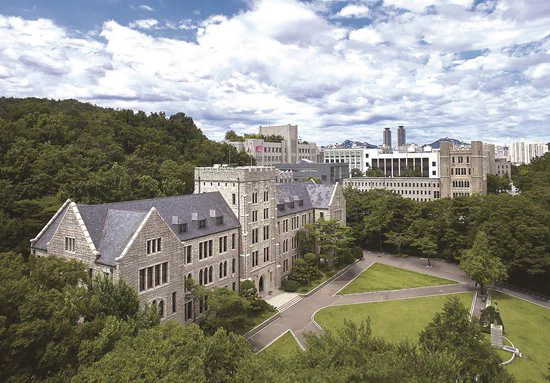

AI+Math Lab @ Korea

At AIML@K, we expand the frontier of artificial intelligence with mathematics through research, teaching, and outreach for the benefit of humanity.

Select Publications

Research Partners

Latest

LFNO: Bridging Laplace and Fourier for Effective Operator Learning

How can we combine the strengths of the Laplace Neural Operator and the Fourier Neural Operator?

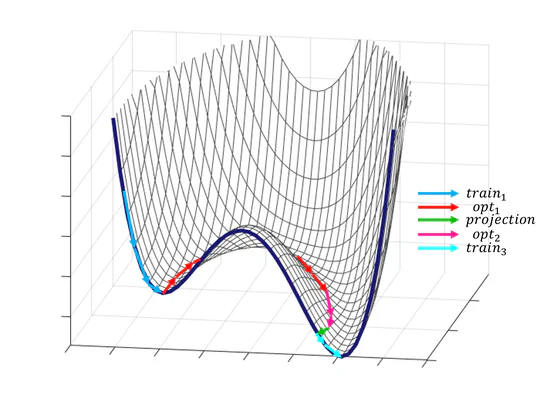

Bypass and Beyond: Extension–Contraction Strategies for Escaping Training Stagnation and Achieving Lossless Pruning

How well can algebraically grounded methods tackle optimization and model compression challenges in deep learning?

NeurIPS 2025: Four Workshop Papers

🎉🎉🎉🎉 AIML@K contributes four workshop papers to NeurIPS 2025!

Kudos to Taehun, Jeung-un, Suhyun, and our external collaborators!

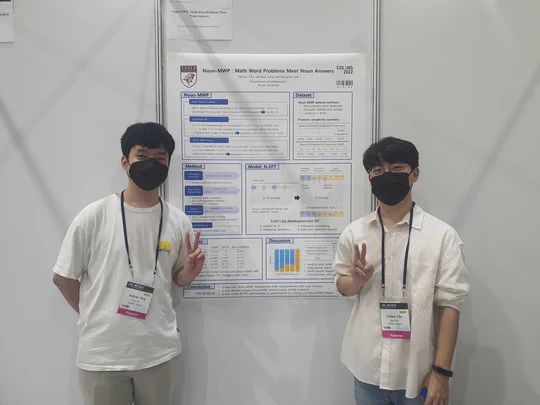

Two New Masters Graduating from AIML@K!

Congratulations to our two newly minted Masters! May the Force be with you as you embark on your next adventure.

ICCV 2025: Two Workshop Papers

🎉🎉🎉 AIML@K contributes two workshop papers to ICCV 2025!

Kudos to Jaeheun, Jaehyuk, Yeajin, Bosung, and Suhyun!

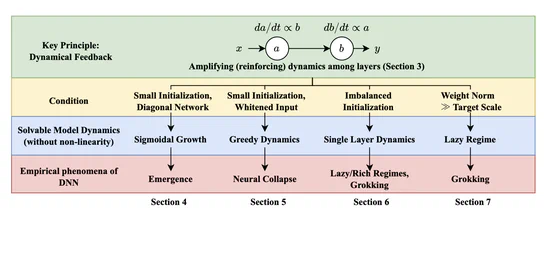

Position: Solve Layerwise Linear Models First to Understand Neural Dynamical Phenomena (Neural Collapse, Emergence, Lazy/Rich Regime, and Grokking)

Can layerwise linear models simplify complex neural network dynamics and speed up deep learning research?

Korea University Physics Prof. Alex Rothkopf’s Talk

The talk will cover AI and quantum computing.

Autoformalization and Automated Theorem Proving with Language Models

Can language models translate natural language math into formal proofs (autoformalization) and more?

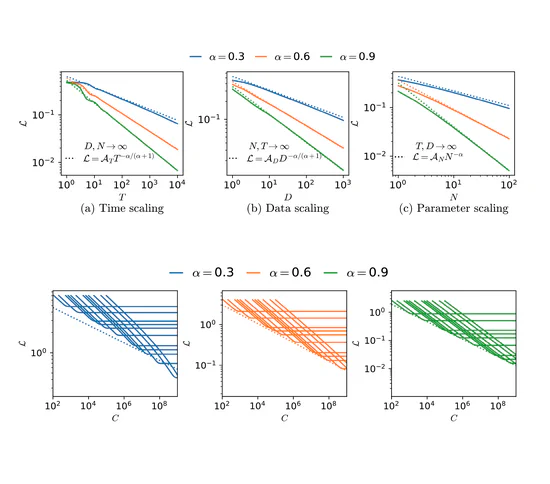

An Exactly Solvable Model for Emergence and Scaling Laws in the Multitask Sparse Parity Problem

Can an analytically solvable model explain emergent behaviors and scaling laws in multitask learning, showing how new skills appear as training progresses?

ICML 2025: One Main Track Paper and Four Workshop Papers Accepted

🎉🎉🎉🎉🎉 AIML@K members are presenting one main track paper and four workshop papers at ICML 2025! Congratulations!

Formalizing Mathematics: Why and How

Formalization of mathematics, the translation of mathematics into a computer-readable language, is growing into a number of successful projects!

Three AIML@K Students Appointed as TAs for the University-wide Python Course GECT 002

We are proud to announce that three students from AIML@K have been selected as Teaching Assistants (TAs) for the university-wide introductory Python programming course “Software Programming Basics” (SW프로그래밍의 기초).

Two New Masters Graduating from AIML@K!

TWO new masters have just graduated! Congratulations, and may the Force be with you!

Taehun Cha Wins First Place in the Concordia Contest @ NeurIPS 2024

We are glad to announce that Taehun Cha won first place in the Concordia Contest @ NeurIPS 2024! 🎉

AIML@K 2024 Fall Workshop

The 5th AIML@K Workshop will take place on September 27, 2024

Four AIML@K Students Serve as TAs for Korea University’s First University-wide Data Science and Artificial Intelligence Course

We are proud to announce that four AIML@K students have been selected in the inaugural recruitment of highly skilled teaching assistants (TAs) for Korea University’s first university-wide course on Data Science and Artificial Intelligence. Chosen through a rigorous university-wide recruitment process, these TAs are integral to the launch and success of this pioneering course.

One New Master Graduating from AIML@K!

ONE new master has just graduated! Congratulations, and may the Force be with you!

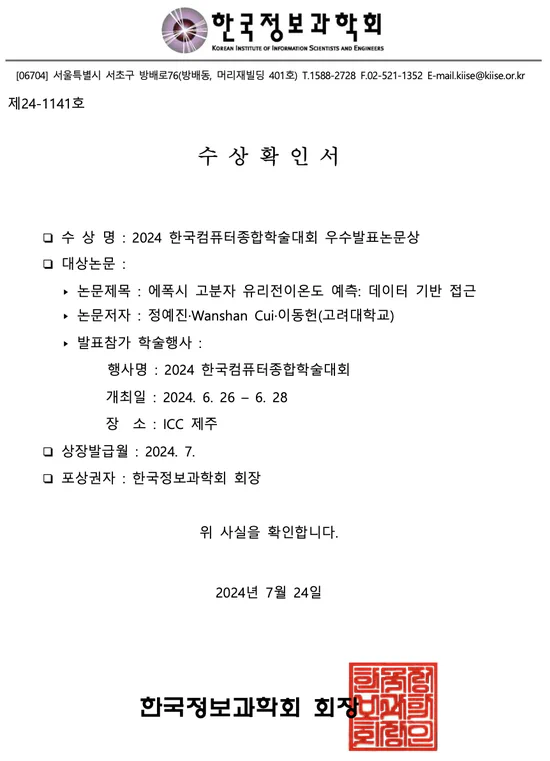

AIML@K Students Receive Best Paper Award at KCC 2024!

We are thrilled to announce that two of our students from the AIML@K program have received the prestigious Best Paper Award at the Korea Computer Congress (KCC; 한국컴퓨터종합학술대회) 2024. This remarkable achievement highlights the exceptional research and dedication behind their award-winning paper, titled “A Data-driven Approach for Predicting Glass Transition Temperature of Epoxy Polymers”.

AIML@K Spring 2024 Workshop

The 4th AIML@K Workshop takes place!

Four AIML@K students serve as TAs for Korea University’s first university-wide Python course

We are delighted to contribute four talented teaching assistants, selected through university-wide open recruitment, to Korea University’s first university-wide Python course. The course, titled “Software Programming Basics” (SW프로그래밍의 기초), is mandatory for all first-year students at Korea University.

Four New Masters Graduating from AIML@K!

FOUR new masters have just graduated! Congratulations, and may the Force be with you all!

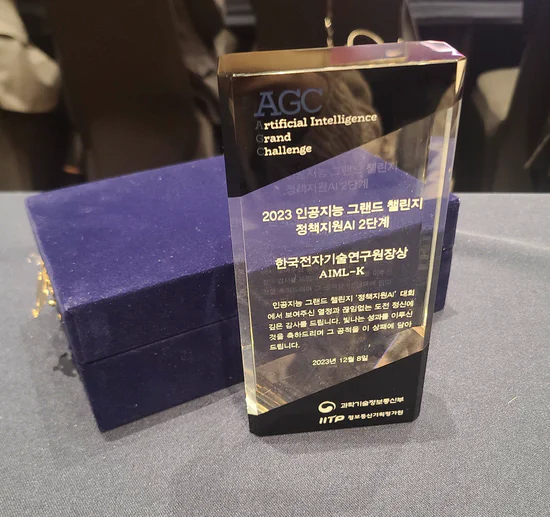

AIML@K Ranks Seventh Nationally in AI Grand Challenge Stage 2 Competition

CONGRATULATIONS to all participants of team ‘aimlk’ for securing seventh place at the 6th AI Grand Challenge, Stage 2!

AIML@K 2023 Fall Workshop

The 3rd AIML@K Workshop takes place!

One New Master Graduating from AIML@K!

One new master has just graduated! Congratulations, and may the Force be with you!

AIML@K Ranks Second Nationally in AI Grand Challenge Open Track Competition

CONGRATULATIONS to all participants of team ‘aimlk’ for valiantly competing with AI tech companies and computer science research labs from all over South Korea and winning second place at the 6th AI Grand Challenge, Open Track!

AIML@K 2023 Spring Workshop

The 2nd AIML@K Workshop takes place!

AIML@K Ranks Seventh in the 6th AI Grand Challenge, Stage 1 Competition

CONGRATULATIONS to all participants of team ‘aimlk’ for ranking in the national top 7 at the 6th AI Grand Challenge, Stage 1! It was a fierce competition with AI tech companies and computer science research labs from all over South Korea.

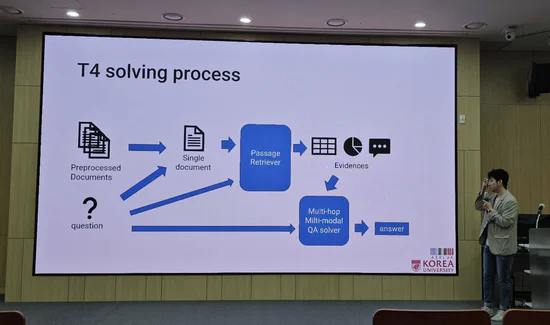

AIML@K Ranks Sixth in the 5th AI Grand Challenge, Stage 1 Competition

CONGRATULATIONS to all participants of team ‘AIL-K’ for ranking sixth nationally at the 5th AI Grand Challenge, Stage 1! This was our first time participating in the AI Grand Challenge, one of the largest series of government-hosted AI competitions, held since 2017.